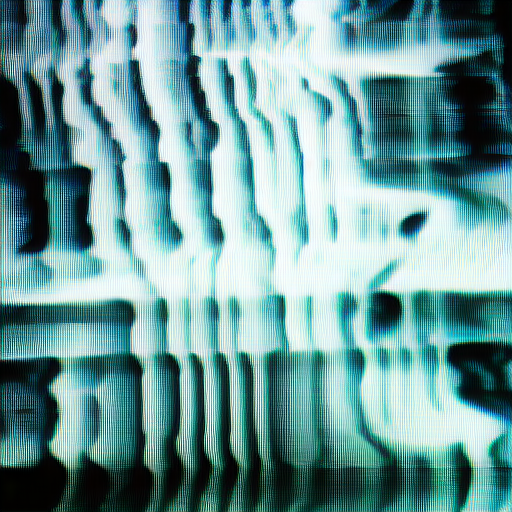

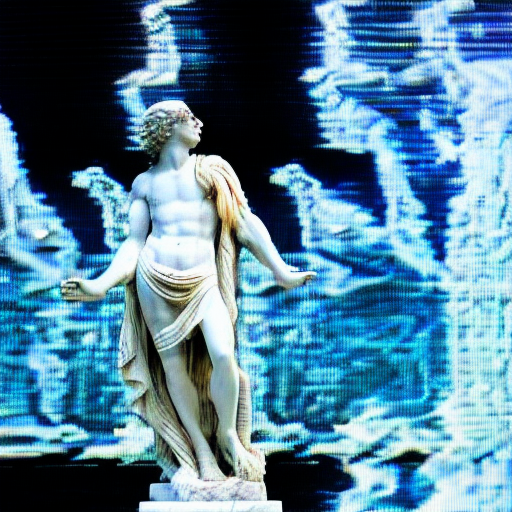

I had the amazing opportunity to present at this year’s FUBAR conference. I decided on a presentation that combined both my interest in glitch art and generative AI. I’ve long been disappointed with how the many generative AI systems struggle at producing images that capture the analog-CRT-glitch aesthetic.

Looking to change this, I finally decided to dive into training my own model to be used with Stable Diffusion. There are several guides available but I didn’t feel like they clearly explained it. Doing a bit more research, I found an online service that will generate the model based on images you provided. As a bonus, it builds off the standard SDv1.5 model so there are base images to create art with.

Using Dreamlook, I created my own SD model using 44 of my analog glitch art images. The results have been amazing!

Check out my whole talk on Youtube where I go into much more detail about the process!