I was very fortunate to get access to two very powerful text-to-image AIs within a short period of time. I had been on MidJourney for a few weeks before my email invitation to join OpenAI’s Dall-E 2 platform arrived. I had honestly forgotten I had even applied for Dall-E, so it was a very pleasant surprise.

Dall-E 2 strikes me as the more powerful of the two. It produces incredibly detailed renderings that could easily be mistaken for real-life photographs. The accuracy is stunning and frightening at the same time.

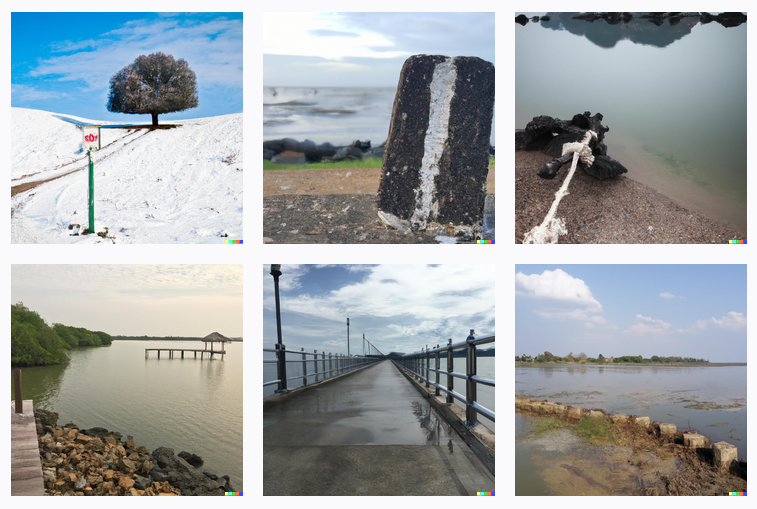

While experimenting with different prompts, I decided to try the same prompt in both systems to see what images they would produce. From these experiments, I got the impression that MidJourney produces more abstract renderings, whereas Dall-E is more literal. Given a single-word prompt like “nothing”, MidJourney produced images that evoked the emotion or conveyed the meaning of the word, whereas Dall-E produced images that were literal examples of the word.

As you can see above, the differences are distinct. I know the two AIs are built on different models, but when working with them it is important to know where their strengths lie.

If I want to create an incredibly realistic-looking, literal image of something, I will use Dall-E. If I want a more abstract, concept-art-style rendering, I will use MidJourney. That is not to say either cannot produce images like the other, but I’ve found each to be stronger at one thing and suited to a very different purpose.